Deep neural networks have enjoyed a fair bit of success in speech recognition and computer vision. The same basic approach was used for both problems: use supervised learning with a large number of labelled examples to train a big, deep network to solve the problem. This approach just works whenever the solution to the problem can be represented with a deep neural network, which is often the case for reasons that I will not explain here.

But there is another way. Unsupervised learning is the idea that we can understand how the world “works” by simply observing it passively and building a rich internal mental model of it. This way, when we have a supervised learning task we wish to solve, we can consult the mental model that was learned during the unsupervised learning stage and solve the problem much more rapidly, using far fewer labels. Unsupervised learning is very appealing because it doesn’t require any labels. Imagine: we simply get a system to observe the world for a while, and afterwards we could use it to answer difficult questions about the signal, such as which objects are in an image, what their 3D shapes are, and what their poses are.
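As a toy illustration of this two-stage idea, here is a minimal Python sketch. It assumes that k-means clustering can stand in for the “mental model” (a deliberately simple substitute, not the kind of algorithm the argument has in mind), that the world is a hypothetical 2-D dataset of two blobs, and that the helper names `make_unlabeled_data`, `kmeans`, `label_centroids` and `predict` are invented for the example. The unsupervised stage sees only unlabelled points; the supervised stage then needs just one labelled example per class.

```python
import math
import random


def make_unlabeled_data(n_per_cluster, seed=0):
    """Hypothetical 'world': points scattered around two centres, with no labels attached."""
    rng = random.Random(seed)
    centres = [(0.0, 0.0), (5.0, 5.0)]
    points = []
    for cx, cy in centres:
        for _ in range(n_per_cluster):
            points.append((rng.gauss(cx, 1.0), rng.gauss(cy, 1.0)))
    rng.shuffle(points)
    return points


def kmeans(points, k=2, iters=20):
    """Unsupervised stage: Lloyd's k-means with a deterministic farthest-point initialisation."""
    centroids = [points[0]]
    while len(centroids) < k:
        # add the point farthest from every centroid chosen so far
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            groups[nearest].append(p)
        # move each centroid to the mean of its group (keep it in place if the group is empty)
        centroids = [
            (sum(x for x, _ in g) / len(g), sum(y for _, y in g) / len(g)) if g else centroids[i]
            for i, g in enumerate(groups)
        ]
    return centroids


def label_centroids(centroids, labelled):
    """Supervised stage: each centroid takes the label of its nearest labelled example."""
    return [min(labelled, key=lambda ex: math.dist(c, ex[0]))[1] for c in centroids]


def predict(point, centroids, centroid_labels):
    """Classify a new point by the label of its nearest centroid."""
    nearest = min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))
    return centroid_labels[nearest]


unlabeled = make_unlabeled_data(200)          # lots of observation, no labels
centroids = kmeans(unlabeled)
labelled = [((0.2, -0.1), "A"), ((4.8, 5.3), "B")]   # only two labelled examples
labels = label_centroids(centroids, labelled)
print(predict((1.0, 0.5), centroids, labels), predict((6.0, 4.0), centroids, labels))
```

Because the cluster structure was found without labels, two labelled points are enough to name the clusters. This works only because the clusters happen to line up with the classes, an assumption that need not hold in general.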

But so far, there are no state-of-the-art results that benefit from unsupervised learning. Why?

1: We don’t have the right unsupervised learning algorithm. Supervised learning algorithms work well because they directly optimize the performance measure that we care about, and they come with a guarantee: if the neural network is big enough and is trained on enough labelled data, the problem will be completely and utterly solved. In contrast, unsupervised learning algorithms have no such guarantee. Even if we took the best current unsupervised learning algorithm and used it to train a huge neural net on a huge amount of data, we could not be confident that it would help us build high-performing systems.

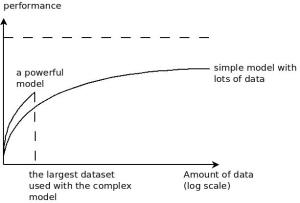

2: Whatever the unsupervised learning algorithm does, its objective will necessarily differ from the objective that we care about. Since the unsupervised learning algorithm doesn’t know what the desired objective is, the only thing it can do is try to understand as much of the signal as possible, in the hope of capturing the bits that are relevant to the problem we care about. Thus, if it takes a big network to do really well on the purely supervised problem, where all the network’s resources are dedicated to the task (as is the case: the high-performing supervised nets have 50M parameters, and they’re still growing), it will take a much larger network to really benefit from unsupervised learning, since that network attempts to solve a much harder problem that includes our supervised objective as a special case.
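A minimal sketch of this mismatch, under loudly stated assumptions: principal component analysis (PCA) stands in for the unsupervised learner, since its objective is to keep as much of the signal's variance as possible, and the number of kept components stands in for network size. The data, from the hypothetical `make_data` helper, has a loud coordinate unrelated to the label and a quiet coordinate that determines it. The other helper names are likewise invented for the example. A one-component model spends its capacity on the loud coordinate; only a larger, two-component model retains the part the supervised task needs.

```python
import math
import random


def make_data(n, seed=0):
    """Hypothetical data: a loud coordinate x unrelated to the label, a quiet y that decides it."""
    rng = random.Random(seed)
    points, labels = [], []
    for _ in range(n):
        label = rng.randint(0, 1)
        x = rng.gauss(0.0, 10.0)                      # high variance, independent of the label
        y = rng.gauss(1.0 if label else -1.0, 0.2)    # low variance, determines the label
        points.append((x, y))
        labels.append(label)
    return points, labels


def principal_components(points, iters=200):
    """2-D PCA: top eigenvector of the covariance by power iteration; the second is orthogonal."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    v = (1.0, 1.0)
    for _ in range(iters):
        v = (sxx * v[0] + sxy * v[1], sxy * v[0] + syy * v[1])
        norm = math.hypot(*v)
        v = (v[0] / norm, v[1] / norm)
    return (mx, my), [v, (-v[1], v[0])]


def encode(points, mean, components):
    """Project centred points onto the kept components (the learned representation)."""
    return [[(p[0] - mean[0]) * w[0] + (p[1] - mean[1]) * w[1] for w in components] for p in points]


def threshold_accuracy(feature, labels):
    """Supervised probe: threshold one feature halfway between the two class means."""
    ones = [f for f, l in zip(feature, labels) if l == 1]
    zeros = [f for f, l in zip(feature, labels) if l == 0]
    m1, m0 = sum(ones) / len(ones), sum(zeros) / len(zeros)
    t = (m0 + m1) / 2
    # predict 1 on whichever side of t the class-1 mean lies
    preds = [int((f > t) == (m1 > m0)) for f in feature]
    return sum(p == l for p, l in zip(preds, labels)) / len(labels)


points, labels = make_data(1000)
mean, (w1, w2) = principal_components(points)
small = encode(points, mean, [w1])        # 1-component "small" model
large = encode(points, mean, [w1, w2])    # 2-component "large" model
acc_small = threshold_accuracy([z[0] for z in small], labels)
acc_large = max(threshold_accuracy([z[i] for z in large], labels) for i in range(2))
print(f"small model: {acc_small:.2f}  large model: {acc_large:.2f}")
```

On this data the first accuracy should sit near chance (about 0.5) and the second near 1.0. The toy is linear and two-dimensional, so it only gestures at the argument; it says nothing about how large real networks would need to be.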

Thus, at this point, it is simply not clear how and why future unsupervised learning algorithms will work.

(from http://www.kip.uni-heidelberg.de/cms/vision/projects/recent_projects/hardware_perceptron_systems/image_recognition_with_hardware_neural_networks/)